We were featured in The Hub Report!

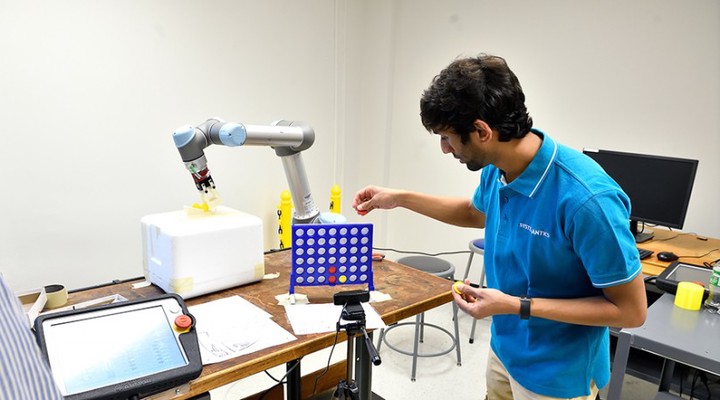

As part of the Robot Systems Programming course, I worked with Aalap Shah to develop a robotic system based on the UR5 and ROS that plays Connect 4 collaboratively with humans.

First, I designed and printed an articulated gripper for the UR5 that picks up the yellow circular tokens from a custom fixture and holds them vertically. For the control pipeline, we used ROS and the MoveIt library to plan motions between the pick-and-place keypoints. A ROS publisher-subscriber pair runs continuously in the background, and the robot moves when it receives a motion signal from the CV/AI subsystems.
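To make the keypoint idea concrete, here is a minimal sketch of how a target column could be turned into an ordered list of pick-and-place keypoints for a motion planner to visit. It is plain Python with no ROS or MoveIt calls, and every name and dimension in it (`Keypoint`, `plan_keypoints`, the fixture position, the column pitch) is a hypothetical placeholder rather than a value from our setup.

```python
from dataclasses import dataclass
from typing import List

# Every number below is a placeholder assumption, not a measurement from the project.
BOARD_ORIGIN_X_M = 0.40              # x of column 0 in the robot base frame
COLUMN_PITCH_M = 0.035               # spacing between adjacent columns
BOARD_Y_M = 0.00                     # y of the board plane
DROP_Z_M = 0.30                      # height of the slot openings
FIXTURE_XYZ_M = (0.30, -0.25, 0.10)  # where the next token waits in the fixture
CLEARANCE_M = 0.05                   # safe height above fixture and board


@dataclass(frozen=True)
class Keypoint:
    name: str
    x: float
    y: float
    z: float
    gripper_closed: bool


def plan_keypoints(column: int, num_columns: int = 7) -> List[Keypoint]:
    """Return the ordered keypoints for dropping one token into `column`."""
    if not 0 <= column < num_columns:
        raise ValueError(f"column must be in 0..{num_columns - 1}, got {column}")
    fx, fy, fz = FIXTURE_XYZ_M
    drop_x = BOARD_ORIGIN_X_M + column * COLUMN_PITCH_M
    return [
        Keypoint("above_fixture", fx, fy, fz + CLEARANCE_M, False),
        Keypoint("grasp_token", fx, fy, fz, True),
        Keypoint("lift_token", fx, fy, fz + CLEARANCE_M, True),
        Keypoint("above_column", drop_x, BOARD_Y_M, DROP_Z_M + CLEARANCE_M, True),
        Keypoint("release_token", drop_x, BOARD_Y_M, DROP_Z_M, False),
        Keypoint("retreat", drop_x, BOARD_Y_M, DROP_Z_M + CLEARANCE_M, False),
    ]
```

In a setup like ours, a subscriber callback would receive the chosen column, call something like `plan_keypoints(column)`, and hand each keypoint to the planner in turn.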

For vision, a webcam facing the board, together with OpenCV, identified the coloured edges of the board by simple thresholding in HSV space. The same setup detected which token was placed at which location on the board, which gives the AI subsystem the game state. This pipeline runs as another ROS publisher-subscriber pair in the background. Finally, the AI subsystem uses the detected game state to decide the best move against the human and signals the robot to carry it out.
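The thresholding step can be illustrated with a small sketch. To keep it dependency-free, it uses Python's standard `colorsys` module on one RGB sample per board cell instead of OpenCV on a full frame, so it is not the code we ran. The threshold values, the `classify_cell` and `read_board` names, and the assumption that the human plays red tokens are all illustrative. Note that `colorsys` reports hue in the range 0 to 1, whereas OpenCV's 8-bit HSV images use 0 to 179 for hue.

```python
import colorsys
from typing import List, Tuple

RGB = Tuple[int, int, int]


def classify_cell(rgb: RGB) -> str:
    """Label one sampled cell colour as 'yellow', 'red', or 'empty'."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    hue_deg = h * 360.0
    # Washed-out or dark samples are treated as an empty slot (assumed thresholds).
    if s < 0.4 or v < 0.3:
        return "empty"
    if 40.0 <= hue_deg <= 70.0:            # assumed hue band for yellow tokens
        return "yellow"
    if hue_deg <= 15.0 or hue_deg >= 345.0:  # assumed band for red; hue wraps at 360
        return "red"
    return "empty"                          # any other colour counts as no token


def read_board(samples: List[List[RGB]]) -> List[List[str]]:
    """Classify a grid of per-cell colour samples into a game state."""
    return [[classify_cell(rgb) for rgb in row] for row in samples]
```

For example, `classify_cell((255, 220, 0))` falls in the yellow band, while a near-white sample such as `(240, 240, 240)` fails the saturation check and is reported as empty.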

Since Connect 4 has a finite state space that the AI can search, the AI always wins against the human.
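As a rough illustration of searching that state space, here is a minimal sketch of depth-limited minimax with alpha-beta pruning for a 6x7 board. This post does not say which algorithm the AI subsystem uses, so treat the technique, the search depth, and all names (`best_move`, `minimax`, `drop`, `has_won`) as assumptions. A depth-limited search like this finds immediate wins and blocks but does not by itself guarantee a win.

```python
import math
from typing import List, Optional, Tuple

ROWS, COLS = 6, 7
EMPTY, HUMAN, ROBOT = 0, 1, 2
Board = List[List[int]]  # board[row][col], with row 0 at the bottom


def valid_moves(board: Board) -> List[int]:
    # Try centre columns first; good move ordering helps alpha-beta prune more.
    order = sorted(range(COLS), key=lambda c: abs(c - COLS // 2))
    return [c for c in order if board[ROWS - 1][c] == EMPTY]


def drop(board: Board, col: int, player: int) -> Board:
    """Return a copy of the board with the player's token dropped into col."""
    new = [row[:] for row in board]
    for r in range(ROWS):
        if new[r][col] == EMPTY:
            new[r][col] = player
            return new
    raise ValueError("column is full")


def has_won(board: Board, player: int) -> bool:
    for r in range(ROWS):
        for c in range(COLS):
            for dr, dc in ((0, 1), (1, 0), (1, 1), (1, -1)):
                end_r, end_c = r + 3 * dr, c + 3 * dc
                if 0 <= end_r < ROWS and 0 <= end_c < COLS:
                    if all(board[r + i * dr][c + i * dc] == player for i in range(4)):
                        return True
    return False


def minimax(board: Board, depth: int, alpha: float, beta: float,
            maximizing: bool) -> Tuple[float, Optional[int]]:
    if has_won(board, ROBOT):
        return 1000 + depth, None   # prefer faster wins
    if has_won(board, HUMAN):
        return -1000 - depth, None  # prefer slower losses
    moves = valid_moves(board)
    if depth == 0 or not moves:
        return 0, None              # no heuristic: unresolved positions score 0
    best_col = moves[0]
    value = -math.inf if maximizing else math.inf
    for col in moves:
        player = ROBOT if maximizing else HUMAN
        score, _ = minimax(drop(board, col, player), depth - 1, alpha, beta,
                           not maximizing)
        if maximizing and score > value:
            value, best_col = score, col
        elif not maximizing and score < value:
            value, best_col = score, col
        if maximizing:
            alpha = max(alpha, value)
        else:
            beta = min(beta, value)
        if alpha >= beta:
            break                   # the opponent would never allow this branch
    return value, best_col


def best_move(board: Board, depth: int = 4) -> Optional[int]:
    """Column the robot should play, or None if the game is already over."""
    return minimax(board, depth, -math.inf, math.inf, True)[1]
```

Given a board where the human has three tokens in a row along the bottom, `best_move` returns the column that blocks the fourth; a stronger player would add a position heuristic or a deeper search.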